gptel

A simple LLM client for Emacs

gptel is a simple Large Language Model chat client for Emacs, with support for many models and backends. It works in the spirit of Emacs, available at any time and uniformly in any buffer.

gptel is available in any context:

- Interact with LLMs from anywhere in Emacs (any buffer, shell, minibuffer, wherever).

- Carry on multiple independent conversations, have one-off ad hoc interactions, or anything in between.

Yet it is unobtrusive: it stays out of your way until you need it.

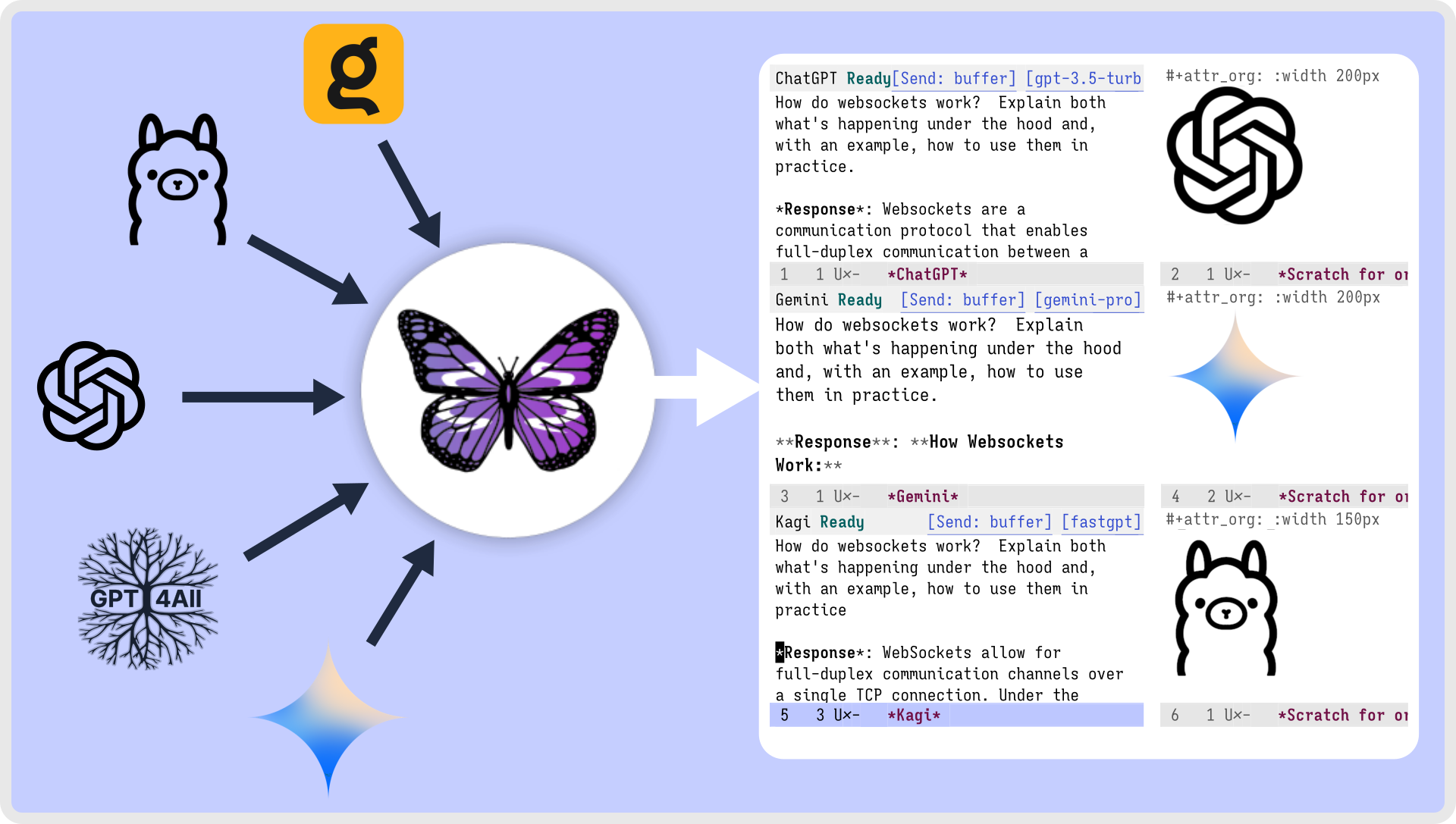

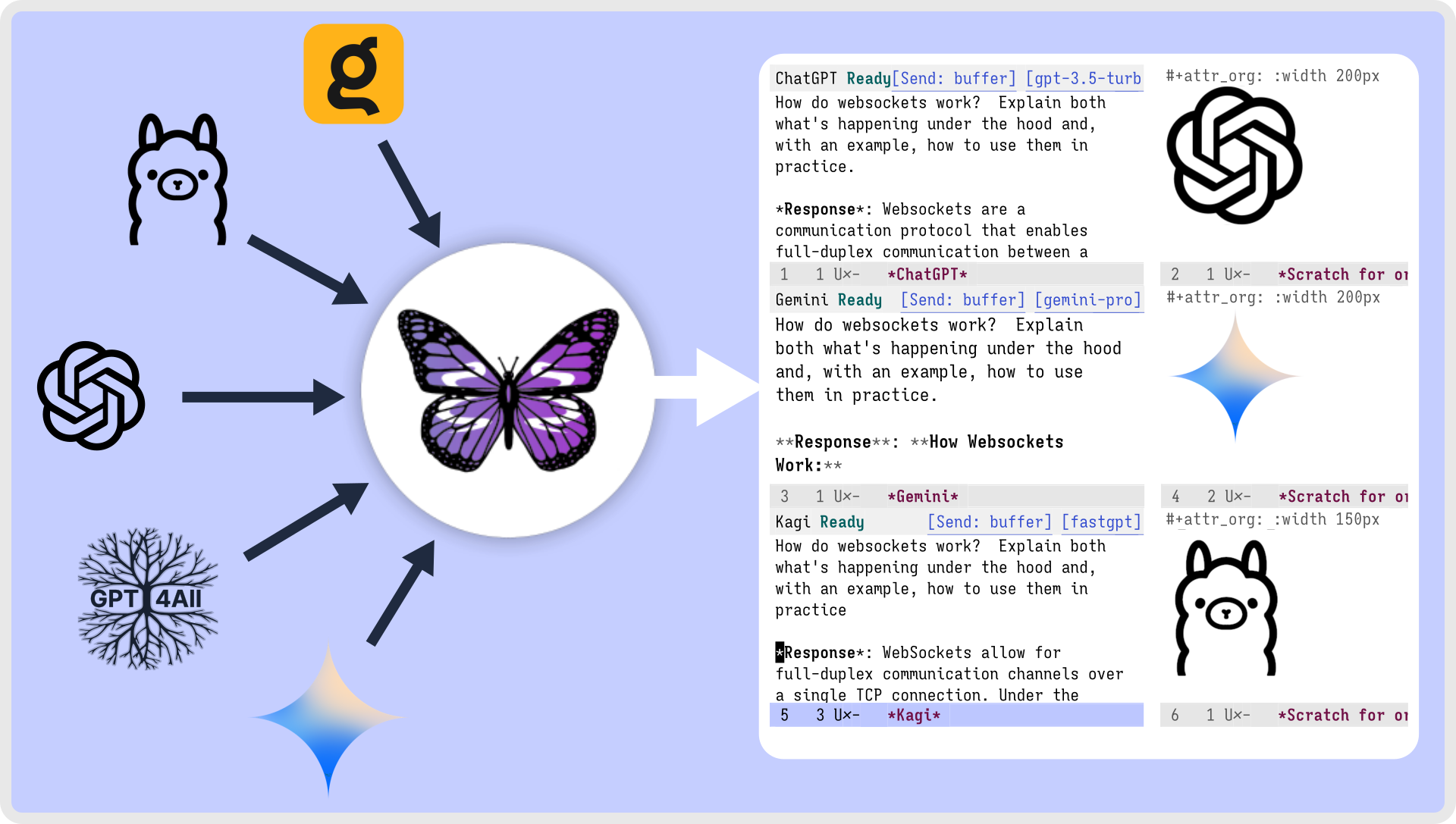

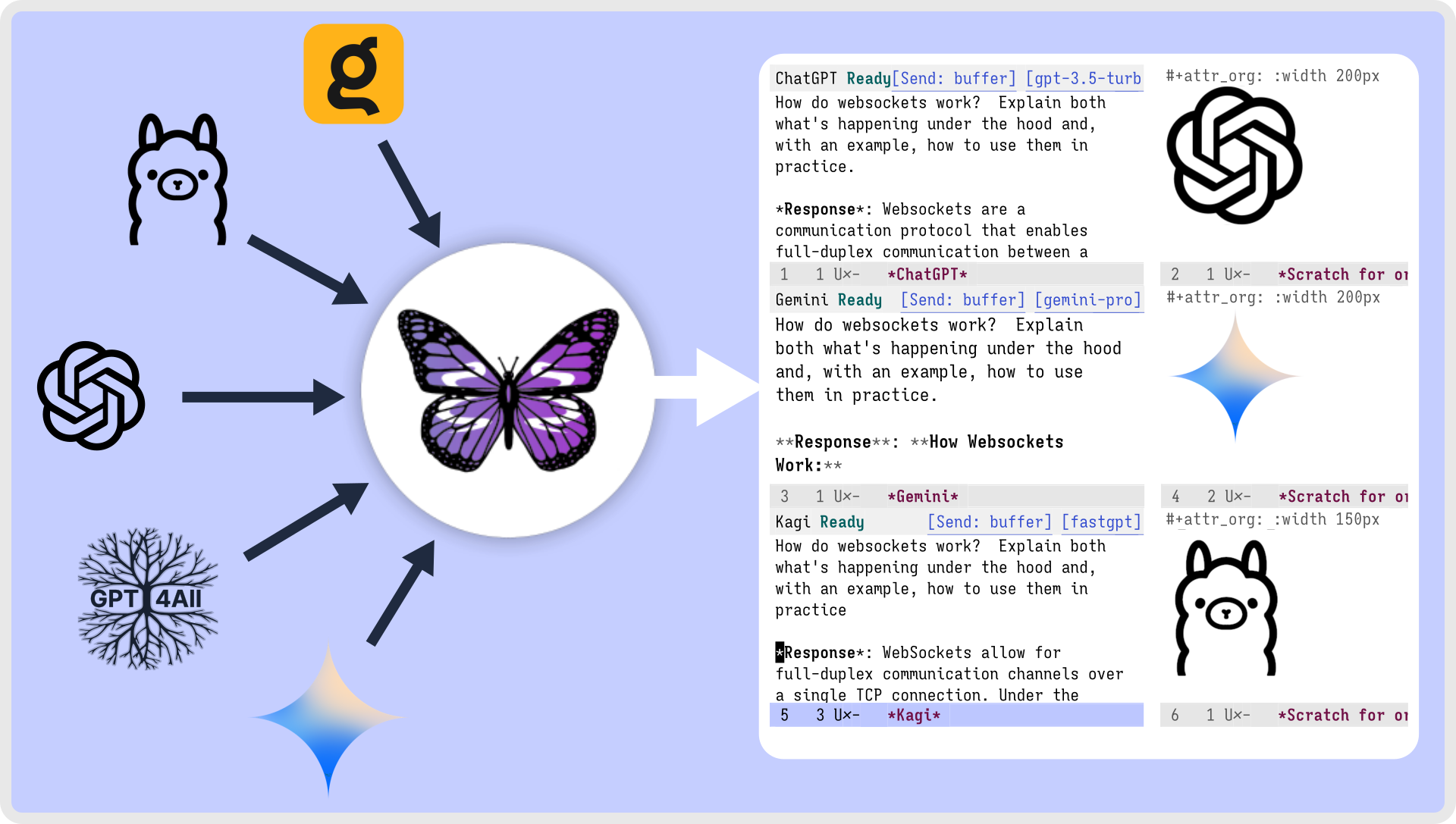

gptel can talk to most LLM services/runners:

- ChatGPT (OpenAI), Claude (Anthropic), Gemini (Google), Llama.cpp and Ollama models,

- but also Perplexity, Kagi, DeepSeek, Azure, GitHub Copilot (chat), xAI and AWS Bedrock,

- and nearly every other inference provider or proxy: OpenRouter, PrivateGPT, GitHub Models, Groq, Cerebras, Mistral Le Chat, Moonshot, Anyscale, Sambanova, Novita AI, ...
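As a rough illustration of how a backend is registered, the Emacs Lisp sketch below points gptel at a local Ollama server and makes it the default. It assumes a recent gptel release, Ollama listening on its default address `localhost:11434`, and a model named `mistral:latest` that has already been pulled; the model name and the display name `"Ollama"` are only examples, and the keyword arguments are worth checking against the gptel manual for your version.

```elisp
;; Sketch: register a local Ollama backend and make it the default.
;; Assumes Ollama listens on localhost:11434 and that the model
;; `mistral:latest' has already been pulled (example name only).
(require 'gptel)

(setq gptel-model 'mistral:latest
      gptel-backend (gptel-make-ollama "Ollama"   ; any display name you like
                      :host "localhost:11434"     ; where Ollama is running
                      :stream t                   ; stream responses
                      :models '(mistral:latest))) ; models offered by this backend
```

Other services follow the same pattern with their own constructor and, for hosted providers, an API key.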

gptel can use LLMs as agents:

- Supports tool use to equip LLMs with agentic capabilities.

- Supports Model Context Protocol (MCP) integration using mcp.el.
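Tools are ordinary Emacs Lisp functions, described to the model by a name, a description and typed arguments. The following is a minimal sketch, assuming a gptel version that provides `gptel-make-tool`; the tool name `read_buffer`, its description and the `"emacs"` category are illustrative choices, not something gptel ships with.

```elisp
;; Sketch: define a tool the LLM may call to read an Emacs buffer.
;; `read_buffer' and the "emacs" category are illustrative names.
(require 'gptel)

(gptel-make-tool
 :name "read_buffer"                       ; name the model sees
 :function (lambda (buffer)                ; runs when the model calls the tool
             (unless (buffer-live-p (get-buffer buffer))
               (error "Buffer %s is not live" buffer))
             (with-current-buffer buffer
               (buffer-substring-no-properties (point-min) (point-max))))
 :description "Return the contents of an Emacs buffer"
 :args (list '(:name "buffer"
               :type string
               :description "Name of the buffer to read"))
 :category "emacs")                        ; arbitrary grouping label
```

Defining a tool only registers it; it still has to be enabled for a request (for instance from gptel's menu) before the model can call it.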

gptel is featureful. It can:

- stream responses without ever blocking Emacs. (If you know, you know!)

- handle multimodal input: include images and documents with your queries.

- handle "reasoning" content in LLM responses.

Chats are just regular Emacs buffers. This means you can:

- save chats as regular Markdown/Org/Text files and resume them later.

- edit your previous prompts or LLM responses when continuing a conversation. These will be fed back to the model.

- use gptel seamlessly in Org-mode.
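For example, if you prefer new chat buffers to use Org markup, a single setting should be enough. This sketch assumes the user option `gptel-default-mode`, which selects the major mode for new gptel chat buffers.

```elisp
;; Sketch: open new gptel chat buffers in Org mode instead of the default.
(require 'gptel)

(setq gptel-default-mode 'org-mode)
```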

gptel is transparent and composable:

- It supports introspection, so you can see exactly what will be sent. Inspect and modify queries before sending them.

- Pause multi-stage requests at an intermediate stage and resume them later.

- Don't like gptel's workflow? gptel is fully scriptable: use its simple API to build your own workflow for any supported model or backend.
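A minimal scripting sketch, assuming `gptel-request` with its `:callback` keyword as provided by recent gptel versions and whatever backend and model are currently configured. The request is asynchronous: `gptel-request` returns immediately and the callback runs once the reply (or an error) arrives. The prompt text is just an example.

```elisp
;; Sketch: send a one-off prompt without opening a chat buffer.
(require 'gptel)

(gptel-request
 "Summarize the Emacs philosophy in one sentence."
 :callback (lambda (response info)
             ;; This sketch treats any non-string RESPONSE as a failure
             ;; and reports the :status entry of the INFO plist.
             (if (stringp response)
                 (message "LLM: %s" response)
               (message "gptel-request failed: %s" (plist-get info :status)))))
```

Because the callback is an ordinary function, it can just as well insert the text into a buffer, parse it, or chain another request.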